AI and Cybersecurity: Transforming the Landscape in 2026

- GK

- Nov 8, 2025

Updated: Dec 23, 2025

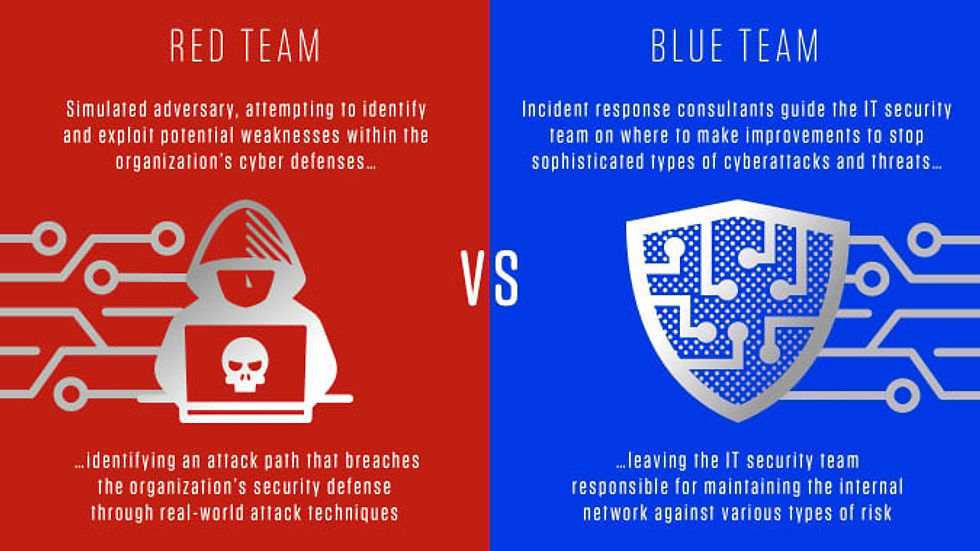

In 2026, artificial intelligence isn’t just augmenting cybersecurity; it’s redefining it. Red teams (ethical hackers who simulate attacks) and blue teams (defenders who detect and respond) increasingly rely on AI systems that automate, scale, and optimize their operations.

The result? A faster, smarter, and more adaptive cybersecurity ecosystem. However, that added complexity also introduces new risks. This post explores how AI empowers both sides of the security equation, the emerging purple team collaborations that unite them, and the governance needed to keep innovation secure.

How AI Empowers Blue Teams (Defense)

For blue teams, AI acts as an intelligent force multiplier. By analysing terabytes of telemetry data, it can detect anomalies, correlate signals across hybrid environments, and recommend responses in real time, far faster than human analysts working manually.
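
Production anomaly detectors can be far more sophisticated, but the core idea is comparing new telemetry against a learned baseline. The minimal sketch below assumes the simplest possible baseline (mean and standard deviation of hourly failed-login counts) and flags values more than three standard deviations away. The function names, the threshold, and the sample counts are hypothetical and not taken from any tool mentioned in this post.

```python
from statistics import mean, stdev


def fit_baseline(history: list[float]) -> tuple[float, float]:
    """Learn the mean and sample standard deviation of known-normal telemetry."""
    if len(history) < 2:
        raise ValueError("need at least two baseline observations")
    return mean(history), stdev(history)


def flag_anomalies(observations: list[float], baseline: tuple[float, float],
                   threshold: float = 3.0) -> list[tuple[int, float, float]]:
    """Return (index, value, z-score) for each observation whose z-score
    exceeds `threshold` in absolute value."""
    mu, sigma = baseline
    flagged = []
    for i, x in enumerate(observations):
        if sigma == 0:
            # A perfectly flat baseline: any deviation at all is anomalous.
            z = 0.0 if x == mu else float("inf")
        else:
            z = (x - mu) / sigma
        if abs(z) > threshold:
            flagged.append((i, x, z))
    return flagged


if __name__ == "__main__":
    # Hypothetical hourly failed-login counts for a single host.
    history = [12, 10, 11, 13, 9, 12, 10, 11, 12, 10]
    today = [11, 13, 95, 12]
    print(flag_anomalies(today, fit_baseline(history)))
```

Fitting the baseline on a separate known-normal window matters: if the spike were included in the baseline, it would inflate the standard deviation and could partly mask itself.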

Many modern AI systems integrate directly with frameworks like MITRE ATT&CK, mapping observed attacker behaviour to ATT&CK techniques and, from there, to defensive controls. The payoff is measurable: shorter Mean Time to Detect (MTTD) and Mean Time to Respond (MTTR), improved situational awareness, and stronger threat containment.
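
Because MTTD and MTTR are the yardsticks for that payoff, it helps to pin down how they are computed. Definitions vary between organizations; this sketch assumes MTTD averages the time from when activity began to when it was detected, and MTTR averages the time from detection to resolution (some teams measure MTTR from the start of the incident instead). The `Incident` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    occurred: datetime  # when the malicious activity began (often estimated afterwards)
    detected: datetime  # when it was confirmed as a true-positive alert
    resolved: datetime  # when containment and remediation were completed


def _mean_duration(durations) -> timedelta:
    """Average an iterable of timedeltas."""
    seconds = [d.total_seconds() for d in durations]
    if not seconds:
        raise ValueError("no incidents to average")
    return timedelta(seconds=mean(seconds))


def mttd(incidents: list[Incident]) -> timedelta:
    """Mean Time to Detect: average of (detected - occurred)."""
    return _mean_duration(i.detected - i.occurred for i in incidents)


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean Time to Respond, measured here from detection to resolution."""
    return _mean_duration(i.resolved - i.detected for i in incidents)


if __name__ == "__main__":
    t = datetime(2025, 11, 3)  # arbitrary example date
    incidents = [
        Incident(t.replace(hour=9), t.replace(hour=9, minute=30), t.replace(hour=11)),
        Incident(t.replace(hour=14), t.replace(hour=15, minute=30),
                 t.replace(hour=17, minute=30)),
    ]
    print("MTTD:", mttd(incidents))
    print("MTTR:", mttr(incidents))
```

For these two made-up incidents, MTTD comes out at one hour and MTTR at one hour 45 minutes. Tracking both numbers before and after introducing an AI tool is what makes the "measurable payoff" claim testable in a given environment.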

Leading Tools for Blue Teams

| Tool | Key Features | Benefits | Challenges |
|------|--------------|----------|------------|
| ThreatKG | Builds knowledge graphs from threat intel using NLP/ML | Automates context building and detection | Relies on unstructured data quality |
| Adversarial Robustness Toolbox (ART) | Tests ML models against adversarial attacks | Hardens AI defenses | Requires ML expertise |
| AIJack | Simulates privacy and evasion attacks | Tests systems pre-deployment | Limited to AI-specific threats |
| PyRIT (Defensive Mode) | Identifies generative AI risks | Improves model safety | Narrow focus on generative models |
| CAI Blue Agent | Automates asset discovery and vulnerability assessment | Enhances efficiency in hybrid environments | Risk of false positives |
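
To make "testing ML models against adversarial attacks" concrete, here is a hand-rolled Fast Gradient Sign Method (FGSM) attack against a toy logistic-regression detector. This is not ART's API: ART packages FGSM and many other attacks behind its own estimator classes, whereas this sketch implements the math directly so it runs with only the standard library. The weights, bias, and feature vectors are made-up illustrative values.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x) -> float:
    """Probability that feature vector x is malicious under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method against logistic regression.

    For binary cross-entropy loss, the gradient with respect to the input is
    (p - y) * w, so each feature is nudged by eps in the direction that
    increases the loss for the true label y.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [xi + eps * s for xi, s in zip(x, sign)]


def robust_accuracy(w, b, dataset, eps):
    """Fraction of (x, y) pairs still classified correctly after an FGSM perturbation."""
    correct = 0
    for x, y in dataset:
        x_adv = fgsm(w, b, x, y, eps)
        pred = 1 if predict_proba(w, b, x_adv) >= 0.5 else 0
        correct += pred == y
    return correct / len(dataset)


if __name__ == "__main__":
    # Made-up weights for a two-feature "malicious vs benign" detector.
    w, b = [2.0, -1.0], -0.5
    data = [([1.0, 0.5], 1), ([0.0, 2.0], 0)]
    for eps in (0.0, 0.25, 0.5):
        print(f"eps={eps}: robust accuracy={robust_accuracy(w, b, data, eps):.2f}")
```

With these toy values the detector stays fully accurate at eps=0.25 but drops to 50% at eps=0.5, which is the kind of robustness curve that adversarial testing tools are meant to surface before deployment.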

Despite these advantages, challenges persist. Nearly 71% of blue teams report alert fatigue from false positives, and 80% cite a need for AI literacy and data science training. Practices such as maintaining an AI Bill of Materials (AIBOM) and runtime monitoring are becoming standard ways to secure AI-driven pipelines.
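
An AIBOM inventories what an AI system is built from (model, training data, software dependencies), and pairing it with runtime monitoring lets a pipeline refuse to load anything that does not match the inventory. Formal formats exist, such as the ML-BOM support in CycloneDX; the record below is a minimal sketch with hypothetical field names, not that schema, showing the one runtime check it enables: verifying a model file's SHA-256 digest before use.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass
class AIBOMEntry:
    # Hypothetical field names; not the CycloneDX ML-BOM schema.
    model_name: str
    model_version: str
    model_sha256: str  # digest of the exact weights file shipped
    training_datasets: list[str] = field(default_factory=list)
    dependencies: dict[str, str] = field(default_factory=dict)  # package -> pinned version

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


def verify_artifact(entry: AIBOMEntry, artifact: bytes) -> bool:
    """Runtime check: only load a model whose bytes match the recorded digest."""
    return sha256_hex(artifact) == entry.model_sha256


if __name__ == "__main__":
    weights = b"stand-in for a real model file"
    entry = AIBOMEntry(
        model_name="alert-triage-classifier",          # hypothetical model
        model_version="1.2.0",
        model_sha256=sha256_hex(weights),
        training_datasets=["soc-alerts-2024q4"],       # hypothetical dataset label
        dependencies={"example-ml-runtime": "2.1.0"},  # hypothetical package pin
    )
    print(entry.to_json())
    print("verified:", verify_artifact(entry, weights))
    print("tampered:", verify_artifact(entry, weights + b"\x00"))
```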

How AI Amplifies Red Teams (Offense)

AI gives red teams the ability to simulate real-world adversaries with unprecedented realism. Through automation, red teams can scale reconnaissance, exploit generation, phishing campaigns, and even polymorphic malware creation — all within controlled, ethical environments.

AI-based tools now predict exploitable weaknesses, generate payloads, and assess system resilience against APT-level tactics. However, these same capabilities underscore the ethical imperative: red teams must balance innovation with strict guardrails to avoid misuse.
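
Guardrails are partly organizational (rules of engagement, sign-off) and partly technical. One simple technical control is an allowlist check that every AI-proposed target must pass before any tool touches it. The sketch assumes targets arrive as IP address literals and that the authorized scope is expressed as CIDR blocks; `EngagementScope` and `ScopeError` are hypothetical names, and hostnames would need to be resolved (and the resulting addresses checked) first.

```python
import ipaddress


class ScopeError(Exception):
    """Raised when a proposed target falls outside the authorized engagement."""


class EngagementScope:
    def __init__(self, allowed_cidrs, excluded_cidrs=()):
        self.allowed = [ipaddress.ip_network(c) for c in allowed_cidrs]
        self.excluded = [ipaddress.ip_network(c) for c in excluded_cidrs]

    def permits(self, target: str) -> bool:
        """Exclusions win over allowances; anything not explicitly allowed is denied."""
        addr = ipaddress.ip_address(target)
        if any(addr in net for net in self.excluded):
            return False
        return any(addr in net for net in self.allowed)

    def require(self, target: str) -> str:
        """Return the target unchanged if in scope, otherwise raise ScopeError."""
        if not self.permits(target):
            raise ScopeError(f"{target} is outside the authorized scope")
        return target


if __name__ == "__main__":
    # Example scope: one lab subnet, with its gateway carved out.
    scope = EngagementScope(["10.0.0.0/24"], excluded_cidrs=["10.0.0.1/32"])
    for proposed in ["10.0.0.25", "10.0.0.1", "203.0.113.7"]:
        print(proposed, "->", "in scope" if scope.permits(proposed) else "BLOCKED")
```

Denying by default means a hallucinated or mistyped target from an AI agent fails closed rather than reaching a system nobody authorized.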

Key AI Tools for Red Teams

| Tool | Key Features | Benefits | Challenges |
|------|--------------|----------|------------|
| PenTest++ | Automates reconnaissance and exploitation | Speeds up simulations | Still in early development |
| CIPHER | AI-guided penetration testing assistant | Supports new testers | Limited by training data |
| CAI Red Agent | Handles enumeration, exploitation, and privilege escalation | Full automation of test chains | Potential misuse if unsupervised |
| WormGPT | Generates contextual phishing emails | Mimics human tone effectively | Marketed to cybercriminals; raises serious legal and ethical concerns |
| PolyMorpher | Creates polymorphic malware | Tests AV evasion | Requires careful scope control |

A commonly described workflow chains AutoGPT for asset discovery, WormGPT for phishing, and LLaMA 2 for exploit creation into end-to-end simulations. Used responsibly, and only against authorized targets, such tool chains allow teams to uncover and fix weaknesses before real attackers do.
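
"Used responsibly" can be enforced in the orchestration layer itself. The sketch below chains stages into one pipeline, requires an explicit human approval before any stage marked intrusive, and keeps an audit trail. The `Stage` type, `run_pipeline`, and the stage names are hypothetical, and the stages are inert stubs standing in for real tools; in practice `approve` might prompt an engagement lead rather than return a fixed answer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    run: Callable[[dict], dict]  # receives shared context, returns new findings
    intrusive: bool = False      # intrusive stages need explicit human sign-off


def run_pipeline(stages, approve, context=None):
    """Run stages in order, calling approve(stage_name, context) before any
    intrusive stage. Returns the final context and an audit trail."""
    context = dict(context or {})
    audit = []
    for stage in stages:
        if stage.intrusive and not approve(stage.name, context):
            audit.append((stage.name, "skipped: not approved"))
            continue
        context.update(stage.run(context))
        audit.append((stage.name, "completed"))
    return context, audit


if __name__ == "__main__":
    # Stub stages: they only return canned findings and touch no network.
    stages = [
        Stage("asset_discovery", lambda ctx: {"hosts": ["10.0.0.25"]}),
        Stage("phishing_simulation", lambda ctx: {"reported_clicks": 0}, intrusive=True),
    ]
    deny_all = lambda name, ctx: False
    print(run_pipeline(stages, approve=deny_all))
```

Skipped stages are still written to the audit trail, so reviewers can see what the pipeline would have done as well as what it actually did.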

The Rise of Purple Teams: AI-Driven Collaboration

Purple teams — where red and blue teams collaborate — are leveraging AI to unify offensive insight with defensive intelligence. AI enables continuous, adaptive testing, allowing defenders to learn directly from simulated attacks.

This approach improves containment metrics, bridges operational silos, and enhances resilience. Conferences such as UniCon Fall ’25 highlight this “AI-assisted fusion” as the next evolution in threat simulation and cyber readiness.
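
A concrete way for defenders to learn from simulated attacks is to compare which ATT&CK techniques the red side emulated with which ones the blue side actually detected. The sketch assumes both sides report ATT&CK technique IDs. The four IDs in the example (T1566 Phishing, T1059 Command and Scripting Interpreter, T1078 Valid Accounts, T1110 Brute Force) are real ATT&CK techniques, but the detection results are invented for illustration, and `coverage_report` is a hypothetical helper.

```python
ATTACK_NAMES = {  # real ATT&CK technique IDs, used here only as labels
    "T1566": "Phishing",
    "T1059": "Command and Scripting Interpreter",
    "T1078": "Valid Accounts",
    "T1110": "Brute Force",
}


def coverage_report(simulated, detected):
    """Compare techniques the red side emulated with those the blue side caught."""
    simulated = set(simulated)
    caught = set(detected) & simulated  # ignore detections for techniques not simulated
    gaps = sorted(simulated - caught)
    rate = len(caught) / len(simulated) if simulated else 0.0
    return {"coverage": rate, "detected": sorted(caught), "gaps": gaps}


if __name__ == "__main__":
    # Invented results from a single purple-team exercise.
    report = coverage_report(
        simulated=["T1566", "T1059", "T1078", "T1110"],
        detected=["T1566", "T1110"],
    )
    print(f"coverage: {report['coverage']:.0%}")
    for tid in report["gaps"]:
        print("gap:", tid, ATTACK_NAMES.get(tid, "unknown"))
```

Re-running the same report after each round of detection tuning turns it into the continuous feedback loop described above: the gap list tells the blue team exactly which detections to build next.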

Challenges, Risks, and the Road Ahead

AI’s rapid adoption introduces new classes of risk:

Model Hallucinations (32.5%) — AI fabricating false indicators or vulnerabilities.

Shadow AI (10.7%) — Unauthorized use of unsanctioned AI tools.

Skills Gaps (82%) — Lack of cross-trained professionals who understand both cybersecurity and AI.
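
Shadow AI is often first spotted in egress logs. The minimal sketch below counts requests per user to AI services on a watchlist that are not on the sanctioned list. It assumes a hypothetical log format of `<user> <url>` per line, and the domains use the reserved `.example` suffix as placeholders; a real deployment would parse its own proxy or DNS log format and maintain its own watchlist.

```python
from collections import Counter
from urllib.parse import urlsplit


def matches(host: str, domain: str) -> bool:
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def find_shadow_ai(log_lines, ai_domains, sanctioned):
    """Count requests per (user, domain) to AI services that are not sanctioned.

    Assumes each log line is '<user> <url>' (a hypothetical format)."""
    hits = Counter()
    unsanctioned = set(ai_domains) - set(sanctioned)
    for line in log_lines:
        parts = line.split(maxsplit=1)
        if len(parts) != 2:
            continue  # skip malformed lines rather than crash
        user, url = parts
        host = urlsplit(url.strip()).hostname or ""
        for domain in unsanctioned:
            if matches(host, domain):
                hits[(user, domain)] += 1
    return hits


if __name__ == "__main__":
    proxy_log = [
        "alice https://genai-one.example/chat",
        "bob https://api.genai-two.example/v1/complete",
        "carol https://intranet.example/wiki",
        "alice https://genai-one.example/upload",
    ]
    watchlist = {"genai-one.example", "genai-two.example"}
    approved = {"genai-two.example"}
    for (user, domain), n in find_shadow_ai(proxy_log, watchlist, approved).items():
        print(f"{user} -> {domain}: {n} request(s)")
```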

Governance and training must evolve accordingly. Reports like AvePoint’s State of AI and Fortinet’s Global Skills Gap call for greater transparency, cross-disciplinary education, and continuous validation of AI models.
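
Continuous validation can start with something as small as refusing to act on an AI-reported vulnerability until it checks out. The sketch below sorts AI-reported CVE identifiers into confirmed, malformed, and unverified groups. It assumes a trusted CVE feed has already been loaded into a set (`known_cves` in the example is a tiny hypothetical snapshot). CVE-2021-44228 (Log4Shell) and CVE-2014-0160 (Heartbleed) are real identifiers; the other inputs are invented to exercise the malformed and unverified paths.

```python
import re

CVE_ID = re.compile(r"CVE-\d{4}-\d{4,}")  # year, then a sequence number of 4+ digits


def triage_ai_findings(reported_ids, known_cves):
    """Split AI-reported CVE IDs into confirmed, malformed, and unverified lists."""
    confirmed, malformed, unverified = [], [], []
    for raw in reported_ids:
        cve = raw.strip().upper()
        if not CVE_ID.fullmatch(cve):
            malformed.append(raw)   # cannot be a real CVE identifier
        elif cve in known_cves:
            confirmed.append(cve)   # safe to hand to an analyst
        else:
            unverified.append(cve)  # well-formed but possibly hallucinated
    return confirmed, malformed, unverified


if __name__ == "__main__":
    known_cves = {"CVE-2021-44228", "CVE-2014-0160"}  # hypothetical feed snapshot
    reported = ["CVE-2021-44228", " cve-2014-0160", "CVE-2023-123", "CVE-2030-55555"]
    confirmed, malformed, unverified = triage_ai_findings(reported, known_cves)
    print("confirmed:", confirmed)
    print("malformed:", malformed)
    print("needs human review:", unverified)
```

Unverified IDs are routed to a human rather than discarded, since a local feed snapshot can lag behind newly published CVEs.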

| Report | Key Findings | Implications | Recommendations |
|--------|--------------|--------------|-----------------|
| AvePoint (2025) | 75% AI-related breaches | Flaws in data quality and model governance | Adopt AIBOM, red team testing |
| Fortinet (2025) | 82% report AI skills gap | Operational strain in SOCs | Invest in AI literacy |
| Black Duck (2025) | 97% open-source AI use | False positives, unvetted dependencies | Strengthen model validation |

The next frontier involves autonomous red agents, post-quantum AI simulations, and AI literacy as a cybersecurity core skill. Organizations that blend innovation with ethical oversight will lead this transformation. They will secure not just systems, but the AI engines driving them.

Key Takeaways

AI is transforming defensive (blue team) operations through real-time threat detection, anomaly analysis, and rapid response capabilities.

Offensive (red team) simulations increasingly rely on AI for automated exploit development, phishing, and reconnaissance — demanding strong ethical oversight.

“Purple team” collaboration, where red and blue teams work together using AI, bridges skill gaps and strengthens overall resilience.

New vulnerabilities arise with AI adoption — including data quality issues and model hallucinations — emphasising the need for governance and accountability.

Advanced AI models such as Claude Sonnet 4.5 are reported to rival human experts in some vulnerability-detection tasks, reshaping both offence and defence strategies.

Final Thoughts

AI has become both sword and shield in cybersecurity. It empowers defenders to detect and respond faster while arming attackers — and ethical hackers — with smarter tools. The difference lies in governance, collaboration, and human judgment.

As red, blue, and purple teams converge through AI, 2025 marks not just a technological shift, but a philosophical one: the move from reactive security to intelligent resilience.

Additional Reading:

9 AI Enabled Cybersecurity Tools in 2025 - Packetlabs: https://www.packetlabs.net/posts/9-ai-enabled-cybersecurity-tools-in-2025/

The Future of Cybersecurity: Modernizing Red and Blue Team ...: https://insights.pecb.com/future-cybersecurity-red-blue-team-strategies-2025/

Three Reports Define 2025's AI Cybersecurity Reality - SecureWorld: https://www.secureworld.io/industry-news/2025-ai-cybersecurity-reality

Top 6 AI Red Teaming Services in 2025 - Mend.io: https://www.mend.io/blog/best-ai-red-teaming-services-top-6-services/

How cybersecurity red teams can help keep AI safe - edX: https://www.edx.org/resources/can-cybersecurity-red-teams-keep-ai-safe

Red Teaming with AI in 2025 | How Ethical Hackers Use Artificial ...: https://www.webasha.com/blog/red-teaming-with-ai-how-ethical-hackers-use-artificial-intelligence-for-cybersecurity-testing

Blue Team vs Red Team: AI Security's New Battleground - VerityAI: https://verityai.co/blog/blue-team-red-team-ai-security-battleground

Autonomous AI-Powered Red Teaming for Enhanced Cybersecurity: https://www.zintellect.com/Opportunity/Details/ICPD-2025-12

Red Teaming in 2025: The Bleeding Edge of Security Testing: https://www.cycognito.com/learn/red-teaming/
